Category Archives: Stage 2

IDAT211 – Rendering

The render time was approximately 10 hours for the 3000 frames at 1400 x 1050, saving each frame as a JPEG.
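
For scale, that works out to roughly 12 seconds per frame. Since the 10-hour figure is itself approximate, so is this one:

$$
t_{\text{frame}} \approx \frac{10\ \text{h} \times 3600\ \text{s/h}}{3000\ \text{frames}} = \frac{36\,000\ \text{s}}{3000\ \text{frames}} = 12\ \text{s per frame}
$$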

Render 1

My original plan was to convert the sound data directly into the path of the camera. As a result, the camera looked up and down very quickly and at points clipped through the mountain.

Render 2

I then tried smoothing this out in Illustrator. This reduced the effect slightly but accidentally introduced a backflip.
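
The smoothing here was done by hand in Illustrator. Purely as an illustration of the same idea in code, the Java sketch below applies a centred moving average to a list of camera heights derived from sound samples. The class name `PathSmoother`, the window radius and the sample values are hypothetical; this is not how the path in these renders was produced.

```java
import java.util.Arrays;

/** Hypothetical helper: smooths a sound-derived camera path with a centred moving average. */
class PathSmoother {

    /**
     * Returns a new array where each value is the mean of the samples within
     * `radius` positions on either side (the window shrinks at the ends).
     */
    static double[] movingAverage(double[] heights, int radius) {
        double[] smoothed = new double[heights.length];
        for (int i = 0; i < heights.length; i++) {
            int from = Math.max(0, i - radius);
            int to = Math.min(heights.length - 1, i + radius);
            double sum = 0;
            for (int j = from; j <= to; j++) {
                sum += heights[j];
            }
            smoothed[i] = sum / (to - from + 1);
        }
        return smoothed;
    }

    public static void main(String[] args) {
        // Illustrative values: a jerky up-and-down signal like the raw audio mapping.
        double[] raw = {0, 10, 0, 10, 0, 10, 0};
        System.out.println(Arrays.toString(movingAverage(raw, 1)));
    }
}
```

The radius trades responsiveness for smoothness: a larger window flattens more of the rapid up-and-down motion but makes the camera lag further behind the music.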

Render 3

I decided to use the previous SVG and draw out a smooth path. After 2500 frames of rendering, I found that the path had been accidentally deformed, sending the camera off the terrain model.

Render 4

Perfect

After this I imported the frames into After Effects, added the credits and synchronised the music with the footage.

IDAT204 – Global Record Collection

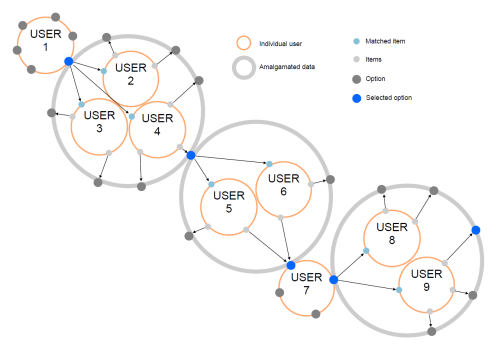

The aim of this system overall is to create a decentralised database containing information on all music using semantic web principles.

The original proposal outlines the idea of a standardised format for this information and a way to navigate through it that could potentially be a ‘killer application’ for the semantic web. In a field such as music, discovering new material is not easily done with traditional web searches, which require you to know to some extent what you are looking for and return cluttered, often unhelpful results. A Global Record Collection (GRC) would work on the simple idea that people who like one thing are statistically inclined to share a liking for another.

Operation

At first the software presents the user with data from a GRCML document belonging either to them, to a specific person or entity, or to a community. This gives them a number of directions to explore; suppose they select ‘Track A’, which they already know. A query is then sent, along with a user ID and session key, to a server with access to a collated copy of the entire GRC. The server generates a list of the most popular music that people who have ‘Track A’ also have. The user ID allows the server to look up what the client already has, and the session key allows it to keep track of what has already been shown so it is not returned again. A GRCML document is then generated and returned to the software, which displays the new information and allows the user to explore it. The user can then select another piece of music and repeat this indefinitely.
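
The filtering step described above could look roughly like the following Java sketch. It assumes the server already has a ranked list of candidate track IDs and the user's library; the class name `SessionFilter` and its in-memory map are hypothetical stand-ins for whatever session store a real server would use.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Hypothetical sketch: drop owned or already-shown items using the user's library and session key. */
class SessionFilter {
    // Session key -> IDs of items already returned during that session.
    private final Map<String, Set<String>> shownBySession = new HashMap<>();

    /**
     * Keeps candidates the user does not own and has not yet been shown in this
     * session (preserving their ranked order), and records them as shown.
     */
    List<String> filter(String sessionKey, Set<String> userLibrary, List<String> candidates) {
        Set<String> shown = shownBySession.computeIfAbsent(sessionKey, k -> new HashSet<>());
        List<String> result = new ArrayList<>();
        for (String id : candidates) {
            // shown.add returns false if the ID was already recorded, which also skips duplicates.
            if (!userLibrary.contains(id) && shown.add(id)) {
                result.add(id);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        SessionFilter filter = new SessionFilter();
        Set<String> library = Set.of("trackA");
        System.out.println(filter.filter("s1", library, List.of("trackA", "trackB", "trackC")));
        System.out.println(filter.filter("s1", library, List.of("trackB", "trackD")));
    }
}
```

Keeping the "already shown" set per session rather than per user means a fresh session starts exploring from scratch, while repeated queries within one session keep surfacing new material.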

On the servers

Systems managing this data would need to crawl the web for GRCML documents and metadata. They would then need to combine this data, removing duplicates and verifying its integrity using some form of reliability ranking, along with a mechanism to correct errors such as spelling mistakes. Each user and each track would be assigned a unique ID, allowing a relational database to be formed. Queries would be sent to the server as URLs containing the unique ID of the selected item. The database would be searched and return the unique IDs of all (or a random sample) of the users with that item; each of these users' collections would then be searched, and the results merged and ranked by popularity. From this a new GRCML document is generated and sent to the user. A generated example of the response is available here.
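
The merge-and-rank step amounts to counting co-occurrences. The Java sketch below assumes the collated data is already available as a map from user ID to the set of track IDs that user owns; the class name `CoOccurrenceRanker` and the sample IDs are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Hypothetical sketch: rank tracks by how many owners of a seed track also own them. */
class CoOccurrenceRanker {

    /**
     * @param libraries user ID -> set of track IDs that user owns
     * @param seedTrack the track the user selected ("Track A")
     * @return other track IDs, most frequently co-owned first (ties broken by ID)
     */
    static List<String> rank(Map<String, Set<String>> libraries, String seedTrack) {
        Map<String, Integer> counts = new HashMap<>();
        for (Set<String> library : libraries.values()) {
            if (!library.contains(seedTrack)) {
                continue; // only users who also have the seed track contribute
            }
            for (String track : library) {
                if (!track.equals(seedTrack)) {
                    counts.merge(track, 1, Integer::sum);
                }
            }
        }
        List<String> ranked = new ArrayList<>(counts.keySet());
        ranked.sort((a, b) -> {
            int byCount = Integer.compare(counts.get(b), counts.get(a));
            return byCount != 0 ? byCount : a.compareTo(b);
        });
        return ranked;
    }

    public static void main(String[] args) {
        Map<String, Set<String>> libraries = Map.of(
                "u1", Set.of("A", "B", "C"),
                "u2", Set.of("A", "B"),
                "u3", Set.of("A", "D"),
                "u4", Set.of("C", "D"));
        System.out.println(rank(libraries, "A"));
    }
}
```

To use a random sample of users instead of all of them, the `libraries` map could simply be subsampled before calling `rank`; the counting itself stays the same.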

An individual item with a unique ID.

    <song id="fz54n0aw2nw576d">
      <artist>Pendulum</artist>
      <title>Granite</title>
      <track>9</track>
      <album>In Silico</album>
      <art>In Silico.jpg</art>
      <bitrate>227300</bitrate>
      <length>4:42</length>
    </song>
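
A client or server reading this format needs to pull out the ID and fields of each item. The Java sketch below does that with the standard DOM parser (`javax.xml.parsers`). It assumes `<song>` is the root element and keeps only a few fields; the `SongParser` class and `Song` record are hypothetical, and a full GRCML document would presumably wrap many such items.

```java
import java.io.StringReader;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

/** Hypothetical sketch: parse a single GRCML <song> element with the JDK's DOM parser. */
class SongParser {
    /** Hypothetical record holding a subset of the song fields. */
    record Song(String id, String artist, String title, String length) {}

    /** Returns the text of the first child element with the given tag, or "" if absent. */
    private static String text(Element parent, String tag) {
        NodeList nodes = parent.getElementsByTagName(tag);
        return nodes.getLength() > 0 ? nodes.item(0).getTextContent() : "";
    }

    static Song parse(String xml) {
        try {
            Element song = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new InputSource(new StringReader(xml)))
                    .getDocumentElement();
            return new Song(song.getAttribute("id"), text(song, "artist"),
                    text(song, "title"), text(song, "length"));
        } catch (Exception e) {
            throw new IllegalArgumentException("Could not parse song XML", e);
        }
    }

    public static void main(String[] args) {
        String xml = "<song id=\"fz54n0aw2nw576d\"><artist>Pendulum</artist>"
                + "<title>Granite</title><length>4:42</length></song>";
        System.out.println(parse(xml));
    }
}
```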

Interface design

There is plenty of scope for a range of interfaces for navigating a GRC. I opted for a design that would be intuitive on touch-screen handheld devices.

Demo download

A copy of the interface with a mock GRCML dataset is available here:

Windows

OSX

Twitter adaptation

The same structure could be used for searching other related data where the user does not know exactly what they are looking for but is interested in branching out from what they already know. I adapted my interface to use data from Twitter. This allows the user to browse through all the people a person follows, select a person of interest and see who they follow in turn.

Currently it only starts from my Twitter account and is slow to load new data. Navigate with the arrow keys and select with the Enter key.

Processing.js version

IDAT211 – Sources of inspiration

One of my sources of inspiration for my audio-generated terrain project is the following sequence from 2001: A Space Odyssey.

Another is Blade Runner, for its use of atmospheric effects to give a sense of scale to the buildings.

Test footage from the game Minecraft, which prompted me to experiment with terrain generation.

IDAT205 – Pluxel.co.uk

pluxel.co.uk is now live.

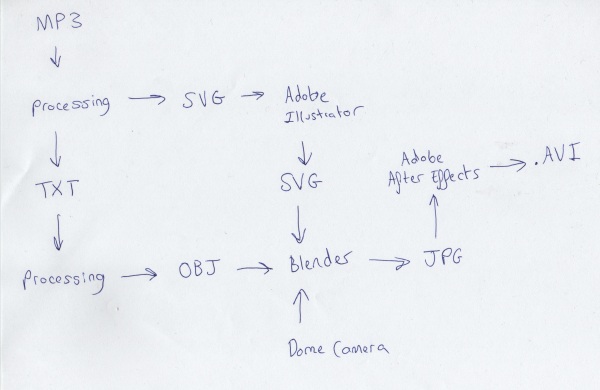

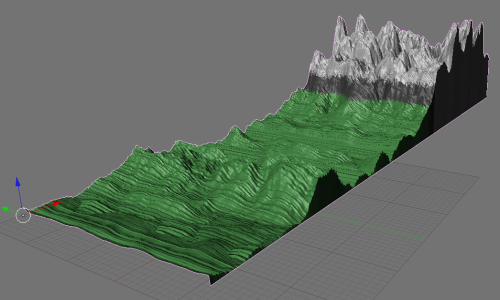

IDAT211 – MP3 to Blender

In order to display my project correctly in the dome, it needs to be recorded within a program with a special camera. Unfortunately this isn’t easy to do in Processing, so I have chosen to use Blender instead. I have adapted my code to generate an .obj file instead of rendering. Coupled with a .mtl file, this lets me import the content into Blender.
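
Generating the .obj file boils down to writing one vertex (`v`) line per grid point and one face (`f`) line per grid cell, with 1-based vertex indices as the Wavefront OBJ format requires. The Java sketch below is a minimal version of that idea, not the original Processing code: the class name `ObjWriter`, the grid spacing, and the `terrain.mtl` / `terrain` material names are hypothetical. Y is used as the up axis, the usual OBJ convention, and the `mtllib`/`usemtl` lines link the mesh to a material defined in the accompanying .mtl file.

```java
import java.util.Locale;

/** Hypothetical sketch: turn a height grid into Wavefront OBJ text made of quads. */
class ObjWriter {

    static String toObj(float[][] heights, float cellSize, String mtlFile, String material) {
        StringBuilder sb = new StringBuilder();
        sb.append("mtllib ").append(mtlFile).append('\n');
        int rows = heights.length;
        int cols = heights[0].length;
        // One vertex per grid point: x across columns, y = height (up), z across rows.
        for (int r = 0; r < rows; r++) {
            for (int c = 0; c < cols; c++) {
                sb.append(String.format(Locale.ROOT, "v %.4f %.4f %.4f\n",
                        c * cellSize, heights[r][c], r * cellSize));
            }
        }
        sb.append("usemtl ").append(material).append('\n');
        // One quad per grid cell; OBJ indices start at 1, ordered so the normal points up (+y).
        for (int r = 0; r < rows - 1; r++) {
            for (int c = 0; c < cols - 1; c++) {
                int topLeft = r * cols + c + 1;
                int topRight = topLeft + 1;
                int bottomLeft = topLeft + cols;
                int bottomRight = bottomLeft + 1;
                sb.append("f ").append(topLeft).append(' ').append(bottomLeft).append(' ')
                  .append(bottomRight).append(' ').append(topRight).append('\n');
            }
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        float[][] heights = {{0f, 1f}, {2f, 3f}};
        System.out.print(toObj(heights, 1f, "terrain.mtl", "terrain"));
    }
}
```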

The MP3 audio data is collected in real time at regular intervals by the Minim library for Processing and then written to a .txt file. This file is then read by a second Processing sketch, which generates the .obj file for use in Blender.
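
The layout of the intermediate .txt file is not described here, so the Java sketch below assumes a simple one: one line per audio snapshot, with that snapshot's values separated by whitespace. Under that assumption, reading the file back yields a grid in which each line becomes one row of terrain heights. The class name `AudioTextReader` is hypothetical, and the writing side (done with Minim in Processing) is not shown.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical reader: one line per audio snapshot, whitespace-separated values (assumed format). */
class AudioTextReader {

    static float[][] read(Reader source) {
        List<float[]> rows = new ArrayList<>();
        try (BufferedReader in = new BufferedReader(source)) {
            String line;
            while ((line = in.readLine()) != null) {
                line = line.trim();
                if (line.isEmpty()) {
                    continue; // skip blank lines
                }
                String[] parts = line.split("\\s+");
                float[] row = new float[parts.length];
                for (int i = 0; i < parts.length; i++) {
                    row[i] = Float.parseFloat(parts[i]);
                }
                rows.add(row);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return rows.toArray(new float[0][]);
    }

    public static void main(String[] args) {
        float[][] grid = read(new StringReader("0.1 0.5 0.2\n0.3 0.9 0.4\n"));
        System.out.println(Arrays.deepToString(grid));
    }
}
```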

MP3 -> Minim -> Processing -> .txt -> Processing -> .obj -> Blender -> .jpg -> After Effects -> .avi

IDAT211 – Terrain Generation

For a while now I have been mucking around with terrain generation in Processing; here are two test renders I have done. The first uses a primitive algorithm that samples surrounding blocks to produce a smoothed terrain, but it has a tendency to create diagonal streaks. The second implements proper Perlin noise, which is much more realistic and isotropic.
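
Processing has a built-in `noise()` function, but to show what gradient noise actually does, here is an independent, simplified 2D Java sketch in the style of Ken Perlin's improved noise: a shuffled permutation table picks a gradient at each lattice corner, and the corner contributions are blended with the fade curve 6t⁵ − 15t⁴ + 10t³. The class name, seed handling and the eight-direction gradient set are my own choices for illustration. This is not Processing's implementation or the code behind the renders.

```java
import java.util.Random;

/** Simplified 2D Perlin-style gradient noise (illustrative sketch, not Processing's noise()). */
class PerlinNoise2D {
    private final int[] perm = new int[512];

    PerlinNoise2D(long seed) {
        int[] p = new int[256];
        for (int i = 0; i < 256; i++) p[i] = i;
        Random rng = new Random(seed);
        // Fisher-Yates shuffle of the permutation table.
        for (int i = 255; i > 0; i--) {
            int j = rng.nextInt(i + 1);
            int tmp = p[i]; p[i] = p[j]; p[j] = tmp;
        }
        // Duplicate the table so lookups like perm[perm[x] + y + 1] never go out of range.
        for (int i = 0; i < 512; i++) perm[i] = p[i & 255];
    }

    /** Smoothstep-like fade curve 6t^5 - 15t^4 + 10t^3. */
    private static double fade(double t) { return t * t * t * (t * (t * 6 - 15) + 10); }

    private static double lerp(double t, double a, double b) { return a + t * (b - a); }

    /** Dot product of the offset (x, y) with one of eight gradient directions chosen by the hash. */
    private static double grad(int hash, double x, double y) {
        switch (hash & 7) {
            case 0: return x + y;
            case 1: return -x + y;
            case 2: return x - y;
            case 3: return -x - y;
            case 4: return x;
            case 5: return -x;
            case 6: return y;
            default: return -y;
        }
    }

    /** Noise value at (x, y); it is exactly zero at integer lattice points. */
    double noise(double x, double y) {
        int xi = (int) Math.floor(x) & 255;
        int yi = (int) Math.floor(y) & 255;
        double xf = x - Math.floor(x);
        double yf = y - Math.floor(y);
        double u = fade(xf);
        double v = fade(yf);
        // Hash each corner of the unit cell to pick its gradient.
        int aa = perm[perm[xi] + yi];
        int ab = perm[perm[xi] + yi + 1];
        int ba = perm[perm[xi + 1] + yi];
        int bb = perm[perm[xi + 1] + yi + 1];
        double bottom = lerp(u, grad(aa, xf, yf), grad(ba, xf - 1, yf));
        double top = lerp(u, grad(ab, xf, yf - 1), grad(bb, xf - 1, yf - 1));
        return lerp(v, bottom, top);
    }

    public static void main(String[] args) {
        PerlinNoise2D noise = new PerlinNoise2D(42L);
        // Print one row of a height map sampled at a scale of 0.1 per block.
        StringBuilder row = new StringBuilder();
        for (int x = 0; x < 10; x++) {
            row.append(String.format("%.3f ", noise.noise(x * 0.1, 0.5)));
        }
        System.out.println(row.toString().trim());
    }
}
```

Unlike averaging neighbouring blocks, the interpolated gradients vary smoothly in every direction, which is what avoids the grid-aligned streaking of the first approach.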

I have decided to use this as the basis of my IDAT211 project, to be displayed in the Immersive Vision Theatre. To add greater depth, the terrain will be generated from music, which will play while the terrain is displayed to create a combined audio and visual effect.

IDAT205 – Pluxel

IDAT204 – Progress

After building my mock-up in Processing, I initially decided to switch to Flash ActionScript for the interface but ran into issues porting my existing code. So I went back to my prototype and implemented proper object-oriented programming, so that I could use it for the project itself in a language I am comfortable with. I also abandoned the spherical layout in favour of a cylindrical one. This removed an element of complexity from the application and allowed for infinite lists while retaining the core principles of the design.
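
One way a cylindrical layout can give an "infinite" list is to space items evenly around the cylinder and wrap the selection index with modular arithmetic, so scrolling past the last item lands back on the first. The Java sketch below shows only that idea; the class name `CylinderList` and its methods are hypothetical, not taken from the Processing prototype. Arrow-key presses, as in the Twitter demo, would map to `move(-1)` and `move(1)`.

```java
/** Hypothetical sketch of a wrap-around (cylindrical) list selector. */
class CylinderList {
    private final int size;
    private int selected = 0;

    CylinderList(int size) {
        if (size <= 0) {
            throw new IllegalArgumentException("size must be positive");
        }
        this.size = size;
    }

    /** Moves the selection by delta, wrapping around the cylinder in either direction. */
    void move(int delta) {
        selected = Math.floorMod(selected + delta, size);
    }

    int selected() {
        return selected;
    }

    /** Angle in radians of item i around the cylinder, measured from the selected item. */
    double angleOf(int i) {
        int offset = Math.floorMod(i - selected, size);
        return 2 * Math.PI * offset / size;
    }

    public static void main(String[] args) {
        CylinderList list = new CylinderList(5);
        list.move(-1); // scrolling "up" from the first item wraps to the last
        System.out.println(list.selected());
        System.out.println(list.angleOf(0));
    }
}
```

`Math.floorMod` is used instead of `%` because it always returns a non-negative result, so moving backwards from index 0 wraps correctly.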

From there, progress has been better than expected, and the interface itself is nearly complete, with only the server-side portion of the system presenting any remaining challenge.

IDAT204 – Design

Despite choosing the conceptual pathway, I intend to create a working system to demonstrate it. My project will consist of two parts: the first is the visual client-side application, which displays the information and allows users to explore it; the second is the server-side component, which will process GRCML from multiple sources and create a form of distributed database. The client-side component will send a request, and a new GRCML document will be generated and sent back. The design I have for the client-side application is mainly intended for touch-screen use, as it would be intuitive to use it in this way.