Displayed at Pixelache 2011 in Helsinki.

Category Archives: Music to Terrain

IDAT211 – The .OBJ file

In order to write code that outputs the terrain, I had to pick and understand a 3D model file format.

I explored a number of formats by exporting a flat square from Blender and opening the resulting files in a text editor. From this I found that the simplest format for me to use was Wavefront OBJ. Its advantages were that it was relatively simple to adapt my terrain code to output it, and that it is relatively lightweight, which prevents the model file from becoming too large.

mtllib mat.mtl

usemtl sand

v 1 0.0 1

v 1 0.0 2

v 2 0.0 2

v 2 0.0 1

f 1 2 3 4

usemtl sand

v 1 0.0 2

v 1 0.0 3

v 2 0.0 3

v 2 0.0 2

f 5 6 7 8

usemtl sand

v 1 0.0 3

v 1 0.0 4

v 2 0.0 4

v 2 0.0 3

f 9 10 11 12

...

mtllib – source of the materials

usemtl – material for the face

v – location of a point

f – points to join to make a face

The output portion of my code that writes the .obj file:

for (int x = 1; x < musicArray.length-1; x++) {

for (int y = 1; y < rows-1; y++) {

data[len] = "usemtl sand";

if(Zarray[x][y] > 3)data[len] = "usemtl grass";

if(Zarray[x][y] > 35)data[len] = "usemtl mountain";

if(Zarray[x][y] > 60)data[len] = "usemtl snow";

data[len+1] = "v " + x + " " + Zarray[x][y] + " " + y;

data[len+2] = "v " + x + " " + Zarray[x][y+1] + " " + (y+1);

data[len+3] = "v " + (x+1) + " " + Zarray[x+1][y+1] + " " + (y+1);

data[len+4] = "v " + (x+1) + " " + Zarray[x+1][y] + " " + y;

data[len+5] = "f " +face+ " " +(face+1)+ " " +(face+2)+ " " +(face+3);

face+=4;

len+=6;

}

println((x * 100 / musicArray.length) + "%");

}
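For readers without the full sketch, the same quad-per-cell idea can be written as a self-contained plain-Java example. This is only a sketch under assumptions: the class `TerrainObjSketch`, the methods `materialFor` and `toObjLines`, and the `float[][]` height grid are hypothetical names, and it loops from index 0 rather than 1. The height thresholds and the `mat.mtl` file name are taken from the post.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper, not the original sketch: builds OBJ lines for a height grid.
class TerrainObjSketch {

    // Picks a material name using the same height thresholds as the loop above.
    static String materialFor(float height) {
        if (height > 60) return "snow";
        if (height > 35) return "mountain";
        if (height > 3) return "grass";
        return "sand";
    }

    // Emits one quad (four "v" lines and one "f" line) per grid cell.
    static List<String> toObjLines(float[][] z) {
        List<String> lines = new ArrayList<>();
        lines.add("mtllib mat.mtl");
        int face = 1; // OBJ vertex indices start at 1
        for (int x = 0; x < z.length - 1; x++) {
            for (int y = 0; y < z[x].length - 1; y++) {
                lines.add("usemtl " + materialFor(z[x][y]));
                lines.add("v " + x + " " + z[x][y] + " " + y);
                lines.add("v " + x + " " + z[x][y + 1] + " " + (y + 1));
                lines.add("v " + (x + 1) + " " + z[x + 1][y + 1] + " " + (y + 1));
                lines.add("v " + (x + 1) + " " + z[x + 1][y] + " " + y);
                lines.add("f " + face + " " + (face + 1) + " " + (face + 2) + " " + (face + 3));
                face += 4; // each quad adds four new vertices
            }
        }
        return lines;
    }

    public static void main(String[] args) {
        float[][] z = { {0f, 0f}, {0f, 40f} }; // a single cell with one raised corner
        for (String line : toObjLines(z)) {
            System.out.println(line);
        }
    }
}
```

Collecting the lines in a list avoids having to size a `data` array in advance, at the cost of holding the whole file in memory before writing it.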

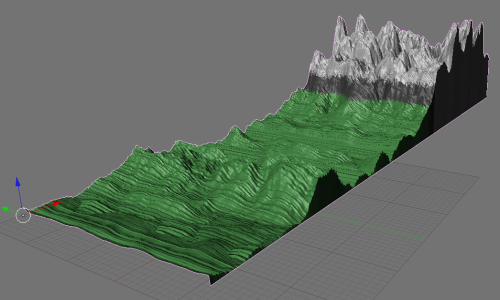

IDAT211 – Materials

As I was using the .OBJ format, I needed to use a .MTL file for the materials. When an .OBJ file is imported into Blender, the .MTL files referenced within it are imported as well.

I decided not to use textures for a number of reasons. The main one was that I wasn’t attempting to make the terrain realistic, only recognizable.

The .MTL file I used in the final version was:

newmtl sand

Kd 1.000 1.000 0.600

illum 0

newmtl grass

Kd 0.300 0.700 0.400

illum 0

newmtl mountain

Kd 0.700 0.700 0.700

illum 0

newmtl snow

Kd 1.000 1.000 1.000

illum 0

newmtl water

Kd 0.400 0.400 1.000

illum 0
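Each entry above names a material with `newmtl`, sets its diffuse colour with `Kd` as red, green and blue values between 0 and 1, and picks an illumination model with `illum`. As a rough illustration, entries in this shape could be generated from ordinary 0 to 255 colour values. The class `MtlEntrySketch` and method `mtlEntry` are hypothetical and were not part of the project.

```java
import java.util.Locale;

// Hypothetical helper: formats one .mtl entry from 8-bit RGB values.
class MtlEntrySketch {

    // Converts 0-255 channel values to the 0-1 range used by Kd.
    static String mtlEntry(String name, int r, int g, int b) {
        return String.format(Locale.ROOT,
                "newmtl %s\nKd %.3f %.3f %.3f\nillum 0",
                name, r / 255.0, g / 255.0, b / 255.0);
    }

    public static void main(String[] args) {
        // 255, 255, 153 corresponds to the sand colour listed above.
        System.out.println(mtlEntry("sand", 255, 255, 153));
    }
}
```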

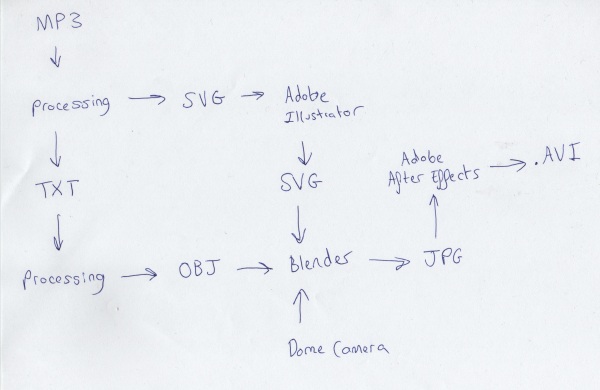

IDAT211 – The Process

IDAT211 – Rendering

The render time was approximately 10 hours for the 3000 frames at 1400 x 1050, with each frame saved as a JPEG.

Render 1

My original plan was to directly convert the sound data to the path of the camera. The effect of this was that the camera looked up and down very quickly and at points clipped through the mountain.

Render 2

I then tried smoothing the path out in Illustrator. This slightly reduced the effect but accidentally introduced a backflip.
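The smoothing here was done in Illustrator, not in code. Purely to illustrate why smoothing helps, a moving average over a list of camera heights damps rapid up-and-down motion. Everything in this sketch is an assumption made for the example: the class `CameraPathSketch`, the method `movingAverage`, and the idea that the path is stored as a `float[]` of heights.

```java
// Hypothetical illustration of path smoothing; not how the renders were actually fixed.
class CameraPathSketch {

    // Centred moving average; the window shrinks at both ends of the array.
    static float[] movingAverage(float[] heights, int radius) {
        float[] out = new float[heights.length];
        for (int i = 0; i < heights.length; i++) {
            int from = Math.max(0, i - radius);
            int to = Math.min(heights.length - 1, i + radius);
            float sum = 0;
            for (int j = from; j <= to; j++) {
                sum += heights[j];
            }
            out[i] = sum / (to - from + 1);
        }
        return out;
    }

    public static void main(String[] args) {
        float[] jerky = {0f, 9f, 0f, 9f, 0f}; // alternating heights, like a fast-changing signal
        for (float h : movingAverage(jerky, 1)) {
            System.out.println(h);
        }
    }
}
```

A larger `radius` gives a flatter path but follows the music less closely.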

Render 3

I decided to use the previous SVG and draw out a smooth path. 2500 frames into the render, I found that the path had been accidentally deformed, sending the camera off the terrain model.

Render 4

Perfect

After this I imported the frames into After Effects, added the credits and synchronised the music with the footage.

IDAT211 – Sources of inspiration

One of my sources of inspiration for my audio generated terrain project is the following sequence from 2001: A Space Odyssey.

Another would be Blade Runner for its use of atmospheric effects to give a sense of scale to the buildings.

Test footage from the game Minecraft also prompted me to experiment with terrain generation.

IDAT211 – MP3 to Blender

In order to display my project correctly in the dome, it needs to be recorded within a program with a special camera. Unfortunately this isn’t easy to do in Processing, so I have chosen to use Blender instead. I have adapted my code to generate an .obj file instead of rendering. Coupled with a .mtl file, this lets me import the content into Blender.

The mp3 audio data is collected by the Minim library for Processing in real time at regular intervals and then written to a .txt file. This file is then read by a second Processing sketch, which generates the .obj file for use in Blender.

mp3 -> minim -> processing -> .txt -> processing -> .obj -> blender -> .jpg -> after effects -> .avi
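The `.txt -> processing` step of this pipeline could look roughly like the plain-Java sketch below. The layout of the text file is not described in the post, so one numeric value per line is an assumption, as are the class `LevelFileSketch` and the methods `parseLevels` and `readLevels`.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Arrays;
import java.util.List;

// Hypothetical reader for the intermediate .txt file (assumed: one value per line).
class LevelFileSketch {

    // Parses each non-blank line as a float, skipping blank lines.
    static float[] parseLevels(List<String> lines) {
        float[] tmp = new float[lines.size()];
        int n = 0;
        for (String line : lines) {
            String t = line.trim();
            if (t.isEmpty()) continue;
            tmp[n++] = Float.parseFloat(t);
        }
        return Arrays.copyOf(tmp, n);
    }

    // Reads the whole file and parses it.
    static float[] readLevels(Path file) throws IOException {
        return parseLevels(Files.readAllLines(file));
    }

    public static void main(String[] args) throws IOException {
        // Write a small temporary file so the example runs on its own.
        Path file = Files.createTempFile("levels", ".txt");
        Files.write(file, Arrays.asList("0.12", "0.80", "", "0.45"));
        float[] levels = readLevels(file);
        System.out.println(levels.length + " samples read");
        Files.delete(file);
    }
}
```

Splitting the work into two sketches means the audio only has to be played through once, and the terrain can then be regenerated from the text file as often as needed.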

IDAT211 – Terrain Generation

For a while now I have been mucking around with terrain generation using Processing; here are two test renders I have done. The first uses a primitive algorithm that samples surrounding blocks to produce a smoothed terrain effect, but it suffered from a tendency to create diagonal streaks. The second implements proper Perlin noise, which is much more realistic and is isotropic.
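The code for the first approach is not shown, so the following is only a guess at its general shape: a minimal plain-Java sketch that replaces each cell with the average of itself and its in-bounds neighbours. The class `NeighbourSmoothSketch` and the method `smooth` are hypothetical names.

```java
// Hypothetical sketch of neighbour-averaging smoothing; not the original algorithm.
class NeighbourSmoothSketch {

    // Averages each cell with its in-bounds neighbours in a 3x3 window.
    // Results go into a separate array so smoothed cells never feed into later ones.
    static float[][] smooth(float[][] grid) {
        int w = grid.length;
        int h = grid[0].length;
        float[][] out = new float[w][h];
        for (int x = 0; x < w; x++) {
            for (int y = 0; y < h; y++) {
                float sum = 0;
                int count = 0;
                for (int dx = -1; dx <= 1; dx++) {
                    for (int dy = -1; dy <= 1; dy++) {
                        int nx = x + dx;
                        int ny = y + dy;
                        if (nx >= 0 && nx < w && ny >= 0 && ny < h) {
                            sum += grid[nx][ny];
                            count++;
                        }
                    }
                }
                out[x][y] = sum / count;
            }
        }
        return out;
    }

    public static void main(String[] args) {
        float[][] spike = { {0f, 0f, 0f}, {0f, 9f, 0f}, {0f, 0f, 0f} };
        float[][] result = smooth(spike);
        System.out.println("centre after smoothing: " + result[1][1]);
    }
}
```

For the second approach, Processing's built-in `noise()` function returns Perlin noise values directly, so no hand-written implementation is needed there.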

I have decided to use this as the basis of my IDAT211 project, to be displayed in the Immersive Vision Theatre. To add greater depth to it, the terrain will be generated from music, which will play as the terrain is displayed, giving a combined audio and visual effect.